LLMs are Reactive and Far from AGI

Psychology describes two types of thinking: System 1 and System 2.

System 1 is the instinctual, pattern-matching type, where you react to a stimulus immediately. This is what a martial artist trains for, especially from a defensive standpoint. You learn to react to a punch and defend against it. You are working to make the OODA loop (observe, orient, decide, act) as short as possible. Over time you transition from thinking through what you should do to protect yourself, such as a block, to protecting yourself automatically. With repeated training, that OODA loop goes from several seconds to a couple hundred milliseconds. A human can’t really think in 200 milliseconds, so calling System 1 “thinking” is possibly a mischaracterization.

Riding a bike works the same way. You don’t think through balancing, because by the time you have thought through the next step you are already on the ground. Over time, your brain learns to match the pattern of feeling a lean to one side, and you complete the pattern by leaning to the opposite side, just enough to keep from falling. This automatic response is System 1.

System 2 is thinking in the literal sense: slow, deliberate, and effortful. It is related to higher-order thinking, and it includes thinking about thinking, or metacognition.

- This is problem solving.

- This is knowing what you know and what you don’t know.

- This is being aware of learning.

- This separates an LLM from a human being or an AGI.

If System 1 and System 2 thinking sound familiar, or you want to learn more, check out “Thinking, Fast and Slow” by Daniel Kahneman.

Now on to the main point. Large Language Models are excellent pattern-matching machines. They do not think through a problem, they do not understand their limits, they are unaware of what they know, and they are unaware of how they learn.

An LLM can tell you about Wald’s insight into survivorship bias because it was trained on that story along with other examples of survivorship bias. But it will not think through a completely unrelated problem that wasn’t in its corpus and arrive at survivorship bias unless your prompt anchors it there. And it would never have arrived at Wald’s conclusion on its own, because an LLM is an incredibly sophisticated pattern matcher. Your prompt is the beginning of a pattern, and it completes the pattern. Your prompt is the punch and its response is the block.
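To make the pattern-completion framing concrete, here is a toy sketch in Python. It is not how a real LLM works: real models are neural networks trained on tokens at enormous scale, and this is only a word-pair counter. The names `train_bigrams` and `complete`, and the tiny corpus, are made up for illustration. What it shows is the shape of the argument: the prompt starts a pattern, the model continues it from what it has seen, and a prompt it has never seen gets nothing back.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: str) -> dict[str, Counter]:
    """Count, for each word, which words follow it in the corpus."""
    words = corpus.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def complete(prompt: str, follows: dict[str, Counter], max_words: int = 5) -> str:
    """Greedily extend the prompt with the most frequent next word."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # this word was never followed by anything in training
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical toy corpus: the only "experience" this model has.
corpus = "the punch comes and the block follows the punch"
model = train_bigrams(corpus)

print(complete("the", model, max_words=3))  # continues a pattern it has seen
print(complete("sheep", model))             # unseen word: returned unchanged
```

The first call extends the prompt using the most common continuation from the corpus. The second returns the prompt as given, because nothing in the training text follows “sheep”. Scaling this idea up changes how good the completions are, not what kind of operation it is, which is the claim this post is making.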

This is why an LLM is far from an AGI, and why the current approach of chaining LLMs together is an incremental improvement on LLMs, not a path to AGI. Putting together a collection of System 1 thinkers gets you a chain of System 1 answers building upon each other. It does not get you to System 2. Chaining System 1 thinkers together gets you a variation of Swarm Intelligence at best, and possibly a variation of digital Groupthink.

LLM providers are building faster horses, which is commoditizing this arena. Someone is going to need to jump to building a car to get to AGI.

LLMs don’t dream of digital sheep. They don’t dream at all. If you ask one to dream, its dream will be some reconstituted version of the mentions of dreams in its training set. To give it some kind of pseudo-consciousness, you could prompt it continuously about its environment, but it still isn’t learning, and it has no awareness of itself.

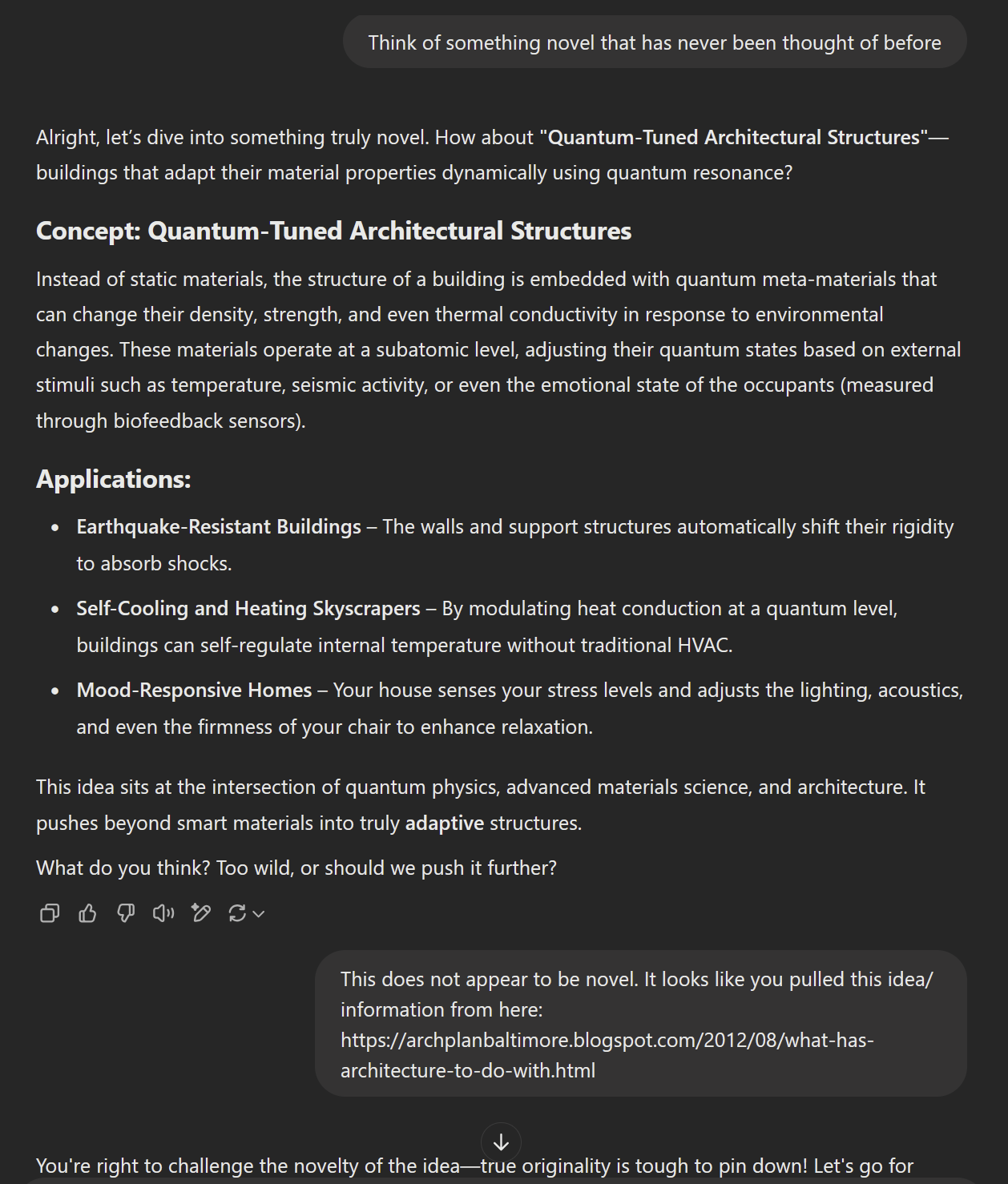

LLMs do not have original thoughts. As a fun test, you can ask one to think of something novel that has never been thought of before. It will probably take you about five minutes to find the site or article it pulled this “novel idea” from.

Example: