ChatGPT gotcha: more hallucinations

Here is another serious gotcha when using ChatGPT.

Be careful when you ask ChatGPT to use only a specific source! The answer can be another hallucination.

I have seen several people try to tell ChatGPT to source its data only from a specific site. This reflects a misunderstanding of how ChatGPT works. ChatGPT has no sense of provenance for its data: it simply produces the next highly probable word based on the words before it. Your hint about the source does not restrict where its answer actually comes from*.

In effect, it ignores your directive. It does not work like a Google search, where you can restrict results to a specific site such as https://en.wikipedia.org (for example, with the site: operator).
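To make the "next highly probable word" idea concrete, here is a deliberately tiny sketch: a greedy bigram model in Python trained on a made-up, four-sentence corpus (the names and sentences are hypothetical). ChatGPT is vastly larger and conditions on far more than the previous word, so treat this as an analogy, not as how ChatGPT is implemented. It does illustrate two points from this post: the word counts keep no record of which source each word came from, and chaining likely words together can produce a confident "fact" that appears in none of the sources.

```python
from collections import Counter, defaultdict

# Hypothetical mini "training corpus" about three unrelated people.
# The model below never records which sentence a word pair came from.
CORPUS = [
    "alice smith won a medal in swimming",
    "alice smith won a prize",
    "bob jones wrote a book about databases",
    "carol lee wrote a book about databases",
]

def train_bigrams(sentences):
    """Count how often each word follows each other word (no source is kept)."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows

def generate(follows, start, max_words=10):
    """Greedily append the most frequent next word until no continuation is known."""
    words = start.split()
    while len(words) < max_words and follows[words[-1]]:
        next_word, _count = follows[words[-1]].most_common(1)[0]
        words.append(next_word)
    return " ".join(words)

model = train_bigrams(CORPUS)
print(generate(model, "alice smith"))  # prints: alice smith won a book about databases
```

No sentence in `CORPUS` says Alice Smith has anything to do with a book about databases; the output splices facts about different people, in the same spirit as the co-mingled bio described below.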

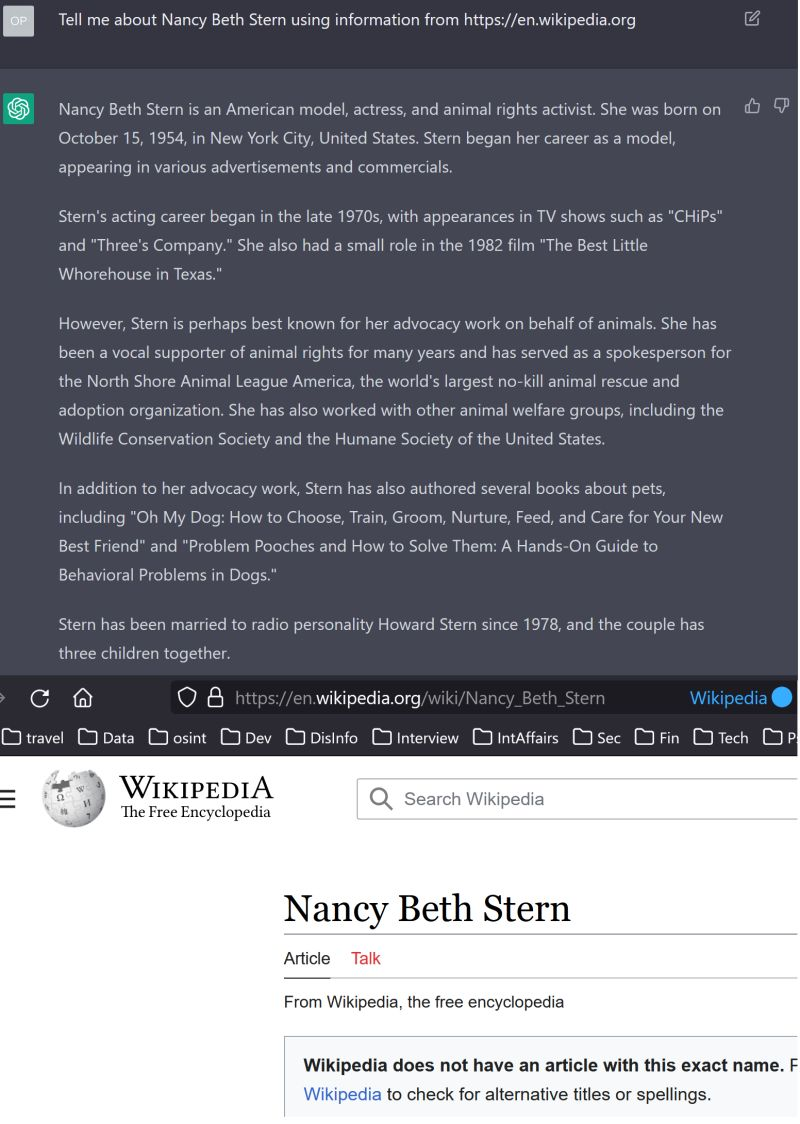

My screenshot is an example. I asked it to tell me about Nancy Beth Stern using information from Wikipedia. There is no Wikipedia article about her, yet ChatGPT gave me wildly inaccurate information about someone named Nancy Beth Stern. What do I mean by wildly inaccurate? This person is a hallucination: the bio appears to be a co-mingling of several different people. This "ability" could create serious legal liability, such as this possible defamation suit against ChatGPT: https://www.tinselai.com/upcoming-defamation-suit-for-chatgpt/

As of this writing, Wikipedia has no article about Nancy, and nothing in Wikipedia contains the level of detail ChatGPT provided.

The nonexistent article: https://en.wikipedia.org/wiki/Nancy_Beth_Stern

I was trying to find Nancy Beth Stern, the author of numerous computer science books.

I chose Nancy at random from Wikipedia's Women in Red (WiR) project, which aims to turn red links (missing articles) about amazing human beings who happen to be women into blue links (existing articles). Basically, it takes them from unknown to known on Wikipedia.

Volunteer to turn them blue: https://en.wikipedia.org/wiki/Wikipedia:WikiProject_Women_in_Red/Missing_articles_by_occupation/Computer_scientists

*There are some caveats here, such as when browsing is enabled, but this technique still has problems even with that feature. If you ask the same question multiple times, you can get wildly different answers; sometimes it even notes that Wikipedia has no page for this person.

Previous Posts From My Prompt Engineering Series:

1️⃣ What is the temperature, also known as "Why you get funky results sometimes": https://www.tinselai.com/what-is-the-temperature-of-chatgpt/

2️⃣ Prompt Engineering Basics: https://www.tinselai.com/prompt-engineering-basics/

3️⃣ Make ChatGPT Hallucinate With One Easy Trick: Want to make ChatGPT hallucinate? (tinselai.com)